Here at BayesianWitch, we’re huge proponents of A/B testing. However, we’ve discovered that the normal method of A/B testing often confuses people. Some questions we commonly get include: How do you know how many samples to use? What P-value cutoff should you pick? 5%? 10%? How do you know what to choose for the null hypothesis? Mastering these concepts is the most critical part of A/B testing, and yet we find them very unintuitive for the average hacker or marketer to use on a day-to-day basis.

Another issue with standard A/B testing methods is deciding when to end the test. If you are dead certain version A is better than B, it would be great to end the test early. But standard statistical techniques don’t allow you to do this: you fix the sample size in advance, gather the data, and run the test once, and then it’s over. For a deeper explanation, I strongly recommend reading Evan Miller’s seminal article How Not to Run an A/B Test. (Side note: Ben Tilly has a Frequentist Scheme for addressing this problem.)

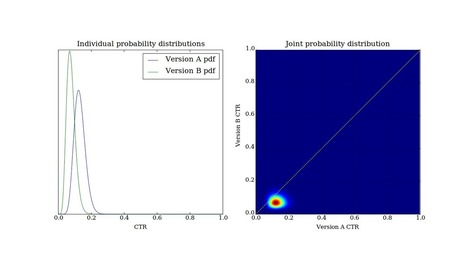

These two factors make it difficult for real-life marketers to keep using the standard, frequentist technique over the long term. It makes sense to choose a method that is more intuitive and that also has the flexibility to end a test when the conclusion is obvious. The technique described in the rest of this post is a Bayesian one, which avoids these issues. Further, this testing model works extremely well, particularly in the many business situations where time is critical.

I also want to emphasize that the method I’m describing is not untested. A version of it with slightly less accurate math was used at a large news site where I previously worked. A non-technical manager used a script implementing it to make changes to email newsletters. Each change provided a marginal (0.5-2%) increase in the conversion rate. But over a few months of implementing these changes one after another, he nearly doubled the open rate and click-through rate of the emails.
